The Data–AI Value Chain is Broken

But not beyond repair

It’s no longer controversial to say most organizations are struggling to realize value from their data and AI investments. What’s more surprising is how consistent the root cause is. Despite differences in sector, size, and strategy, the pattern holds: disconnected data systems, unclear ownership, shallow governance, AI pilots that never scale, and a final mile to business value that remains elusive. If you’re a tech professional, you’ve seen this firsthand. The promise is there, but the payoff lags.

The framework that helps explain and fix this gap is the Data–AI Value Chain. I’ll unpack each stage of the value chain, expose its sticking points, and reveal why increasing efficiency at any stage won’t automatically reduce complexity or resource demand.

What Is the Data–AI Value Chain?

It’s a four-stage framework that traces the transformation of raw data into actionable business value:

Data Integration: Connecting disparate data sources and creating a unified data foundation

Data Governance: Ensuring data quality, security, and appropriate access

AI Implementation: Deploying AI capabilities that leverage the unified data foundation

Value Realization: Measuring and capturing the actual business impact of data and AI initiatives

Each stage has its own logic, bottlenecks, and breakthrough practices. But the goal isn’t to perfect any single link; it’s to strengthen the entire chain, in a way that accounts for how improvements in one area can expose weaknesses in others.

Stage 1: Data Integration

The Problem: Data analysts spend 80% of their time searching for, preparing, and governing data, leaving only 20% for actual analysis. And it’s not getting better. Data now arrives from hundreds, even thousands, of sources. According to a survey by Matillion and IDG, the average enterprise draws on 400 data sources, and more than 20% of respondents reported drawing from 1,000 or more. M&A activity, SaaS sprawl, and real-time data flows have made unification more fragile, not more reliable.

Emerging Practice: The solution isn’t more ETL tools; it’s architecture. Unified platforms, metadata-driven data fabrics, and hybrid data mesh models are replacing the “modern data stack” bloat that made integration someone else’s problem. A hybrid approach, pairing a semantic data fabric with domain-owned data products, is proving both scalable and governable.

Insight: Low-code/no-code, metadata-first environments drastically reduce time-to-integrate and allow domain teams to contribute data without depending on central engineering.

Enter Jevons Paradox, the observation that efficiency gains in using a resource tend to increase total consumption of that resource rather than reduce it. As integration becomes easier, organizations integrate more. Instead of consolidating systems, they connect more of them, which means more data pipelines, more complexity, and higher cognitive overhead. Efficiency doesn’t reduce demand; it shifts the bottleneck downstream.
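
To make the rebound concrete, here is a toy model in Python. It assumes, purely for illustration, that the number of pipelines an organization builds follows a constant-elasticity demand curve in the effort per pipeline; the constant `k` and the `elasticity` value are made-up parameters, not figures from any survey. When elasticity exceeds 1, cutting the effort per pipeline raises total integration effort.

```python
# Toy model of the Jevons Paradox applied to data integration.
# ASSUMPTION: pipelines built = k * cost ** (-elasticity), where `cost` is the
# engineering effort per pipeline. k and elasticity are illustrative values.


def pipelines_demanded(cost: float, k: float = 100.0, elasticity: float = 1.5) -> float:
    """Number of pipelines an organization chooses to build at a given per-pipeline effort."""
    return k * cost ** (-elasticity)


def total_effort(cost: float, k: float = 100.0, elasticity: float = 1.5) -> float:
    """Total integration effort: pipelines built times effort per pipeline."""
    return pipelines_demanded(cost, k, elasticity) * cost


# Halve, then quarter, the effort needed to build one pipeline.
for cost in (1.0, 0.5, 0.25):
    print(f"effort/pipeline={cost:<5} pipelines={pipelines_demanded(cost):6.1f} "
          f"total effort={total_effort(cost):6.1f}")
```

With these assumed numbers, quartering the per-pipeline effort (1.0 to 0.25) grows the pipeline count eightfold and doubles total effort. Set `elasticity` below 1 and total effort falls instead, which is the point: the paradox only bites when demand is elastic.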

Stage 2: Data Governance

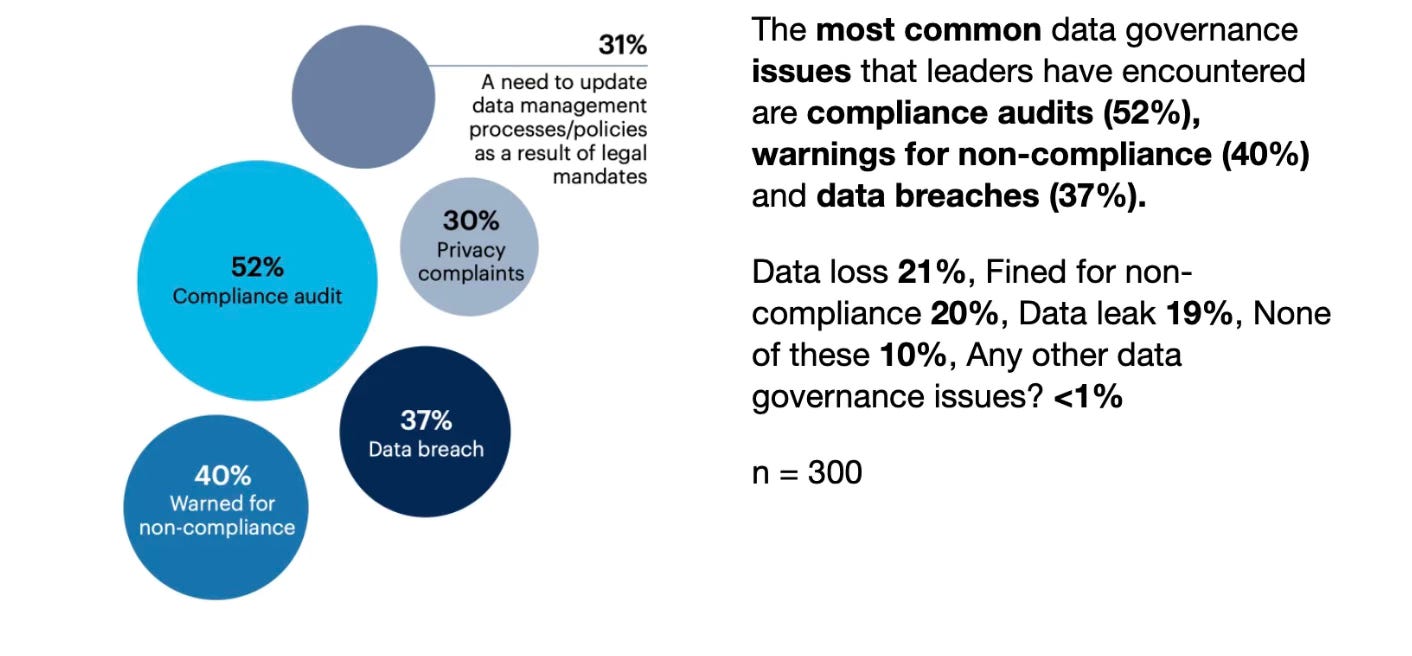

The Problem: AI projects fail when the data can’t be trusted. In one survey, 77% of data leaders said data quality issues were discovered by business users rather than by automated checks or governance tools. Gartner reported that the most common data governance issues are compliance audits, warnings for non-compliance, and data breaches.
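
Catching quality issues before business users do usually means running rule-based checks inside the pipeline. The Python sketch below assumes a hypothetical `orders` feed; the field names and rules are illustrative only. Dedicated data quality tools do this at scale, but the principle is the same: count failures per rule and surface them automatically, before the data is published.

```python
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]


@dataclass
class Check:
    name: str
    predicate: Callable[[Record], bool]  # True means the record passes


def run_checks(records: list[Record], checks: list[Check]) -> dict[str, int]:
    """Count failures per check so problems surface in the pipeline, not in a dashboard."""
    failures = {c.name: 0 for c in checks}
    for rec in records:
        for c in checks:
            if not c.predicate(rec):
                failures[c.name] += 1
    return failures


# Hypothetical rules for a hypothetical orders table.
checks = [
    Check("customer_id_present", lambda r: r.get("customer_id") is not None),
    Check("amount_non_negative",
          lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
    Check("currency_known", lambda r: r.get("currency") in {"USD", "EUR", "GBP"}),
]

orders = [
    {"customer_id": 1, "amount": 120.0, "currency": "USD"},
    {"customer_id": None, "amount": 35.5, "currency": "EUR"},
    {"customer_id": 3, "amount": -10.0, "currency": "XYZ"},
]

report = run_checks(orders, checks)
publishable = all(n == 0 for n in report.values())  # gate publication on a clean report
print(report)       # {'customer_id_present': 1, 'amount_non_negative': 1, 'currency_known': 1}
print(publishable)  # False
```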

Emerging Practice: Governance today has become a product requirement. Companies that build centralized metadata layers, implement federated governance models, and use purpose-based access controls are seeing faster adoption and fewer data incidents. AI-ready governance now includes bias detection, lineage tracing, and model accountability frameworks.
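
Purpose-based access control decides access by why the data is requested, not only by who is requesting it. A minimal Python sketch, assuming a hypothetical `crm.customers` dataset, made-up purpose names, and simple masking of one sensitive column:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetPolicy:
    """Hypothetical purpose-based policy for one dataset."""
    dataset: str
    allowed_purposes: frozenset[str]
    sensitive_columns: frozenset[str] = frozenset()
    # Purposes that may see sensitive columns unmasked.
    unmasked_purposes: frozenset[str] = frozenset()


def read_row(policy: DatasetPolicy, purpose: str, row: dict[str, object]) -> dict[str, object]:
    """Return the row as seen under `purpose`, or raise if the purpose is not permitted."""
    if purpose not in policy.allowed_purposes:
        raise PermissionError(f"purpose '{purpose}' not permitted for {policy.dataset}")
    if purpose in policy.unmasked_purposes:
        return dict(row)
    # Every other permitted purpose sees sensitive fields masked.
    return {k: ("***" if k in policy.sensitive_columns else v) for k, v in row.items()}


customers = DatasetPolicy(
    dataset="crm.customers",
    allowed_purposes=frozenset({"churn_model_training", "fraud_investigation"}),
    sensitive_columns=frozenset({"email"}),
    unmasked_purposes=frozenset({"fraud_investigation"}),
)

row = {"customer_id": 42, "email": "a@example.com", "tenure_months": 18}
print(read_row(customers, "churn_model_training", row))
# {'customer_id': 42, 'email': '***', 'tenure_months': 18}
```

A request for an unlisted purpose, such as marketing, raises `PermissionError` even if the same person could read the data for churn modeling.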

Insight: The shift toward active metadata, where context flows from integration into governance, is key to building trust at scale. Transparency in process builds organizational confidence in the output.

Jevons Applies Again: Improved governance lowers the friction for more teams to use more data. But as trust increases, demand does too. And that demand isn’t always predictable. The paradox is that stronger governance invites wider access, which can strain governance systems unless they’re automated and elastic.

Stage 3: AI Implementation

The Problem: Most AI lives in company slide decks, not systems. Only ~26% of companies have the capabilities to move AI beyond proof-of-concept to real value generation. Model sprawl, talent gaps, and missing MLOps infrastructure stall progress. Even when prototypes succeed, productionizing them is slow and brittle.

Emerging Practice: Organizations that scale AI treat it as product development, not experimentation. That means clear prototype-to-production paths, workflow redesigns, model registries, and unified

Insight: There’s a shift from PoCs to production. Success depends less on AI model accuracy and more on how seamlessly the AI integrates into business decision loops and feedback cycles.

The Jevons Tension: As AI implementation becomes easier via APIs, model hubs, and AutoML, its usage explodes. But this also increases data consumption, model monitoring demands, and governance burdens. Every successful AI use case invites five more. Instead of reducing workload, efficiency multiplies use cases.

Stage 4: Value Realization

The Problem: Many teams can’t prove that their data or AI investments drive business value. Metrics are often post-hoc or disconnected from outcomes. A 2024 McKinsey study found that more than 80% of respondents said their organizations weren’t seeing a tangible impact on enterprise-level EBIT from their use of gen AI.

Emerging Practice: Leading organizations are embedding business KPIs into their data and AI programs from the start. Value-based prioritization, feedback loops, and AI observability platforms ensure continuous alignment with business goals. Some firms now operate AI value offices with the mandate to tie models directly to revenue, cost savings, or risk reduction.

Insight: KPI frameworks and continuous feedback loops keep data teams, finance, operations, and product functions aligned on the same business outcomes.

Jevons' Final Lesson: Value realization does not cap consumption. Once a model proves value, demand surges. That leads to requests for new features, new models, and more data. Efficiency at this stage becomes a flywheel, increasing expectations, technical debt, and the need for cross-functional alignment.

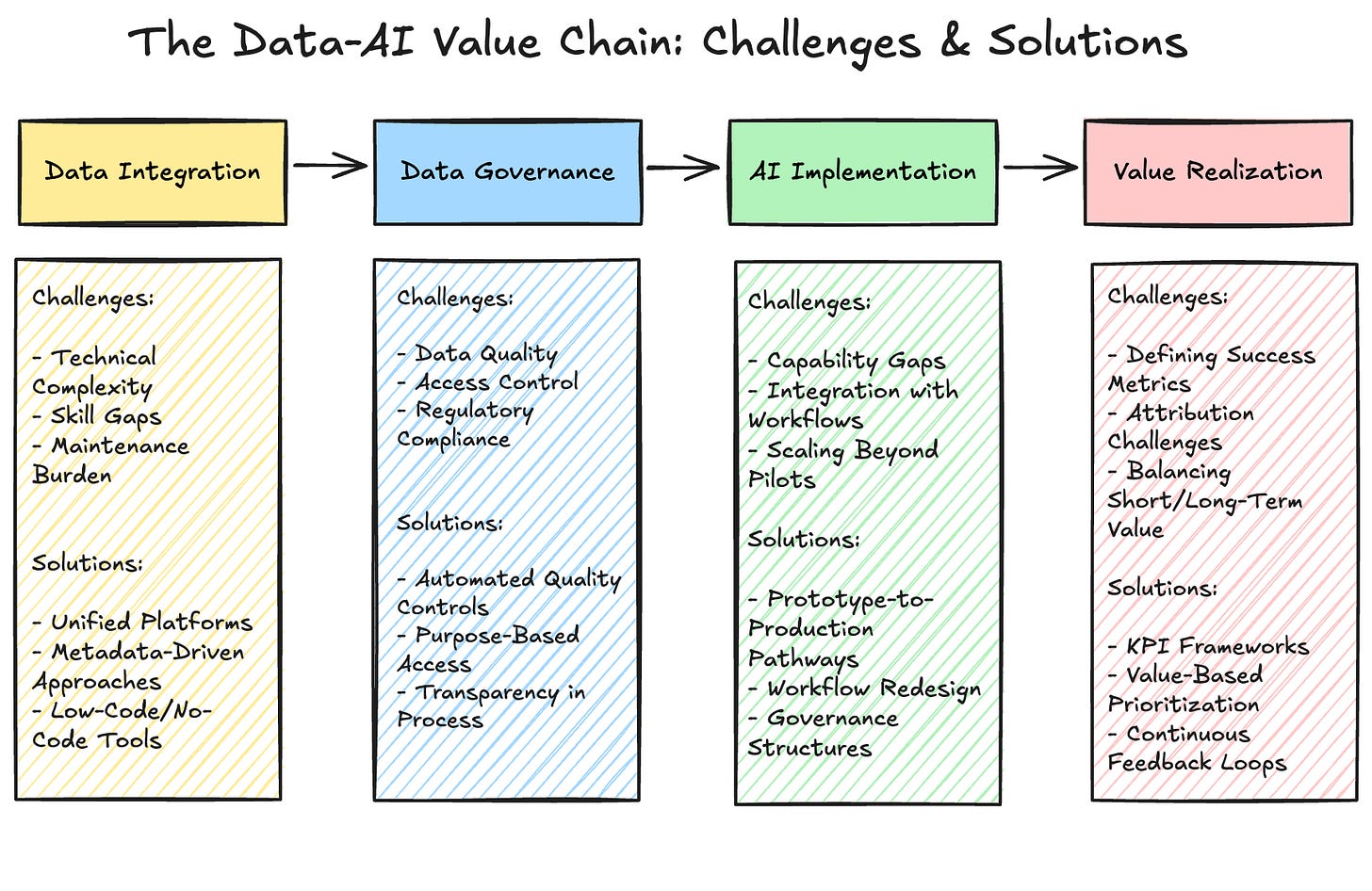

Successful AI implementation and value creation require addressing distinct challenges at each stage. Organizations need a holistic strategy that pairs each stage’s challenges with corresponding solutions in order to progress through the Data–AI Value Chain.

Evolve or Loop

The lesson across all four stages is clear: efficiency without orchestration doesn’t scale; it fragments. Organizations that try to “optimize” each step in isolation fall into the pilot trap, producing successful demos that never evolve into business-wide impact.

To avoid this, companies should build, or refine, their own Data–AI Value Chain. Five shifts make that actionable:

From Linear Pipelines to Adaptive Loops:

The Data–AI Value Chain cannot be a one-way street. It needs feedback loops from value realization back to data prep, governance updates, and AI retraining. Dynamic orchestration is key.
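
One way to picture these feedback loops is a router that takes signals from the value-realization stage and maps each one back to the upstream stage that should respond. The Python sketch below is an illustration only: the `ValueSignal` fields, the 90% KPI tolerance, and the 24-hour freshness limit are assumptions, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class ValueSignal:
    """Hypothetical snapshot from the value-realization stage."""
    kpi_actual: float            # measured business KPI, e.g. conversion uplift
    kpi_target: float            # target agreed before the model was built
    data_freshness_hours: float  # age of the newest data feeding the model
    policy_violations: int       # governance incidents observed in the period


def route_feedback(sig: ValueSignal,
                   kpi_tolerance: float = 0.9,
                   max_staleness_hours: float = 24.0) -> list[str]:
    """Map value-stage signals back to the upstream stage that should act."""
    actions: list[str] = []
    # Upstream problems are listed first: retraining on stale or
    # non-compliant data is unlikely to recover the KPI.
    if sig.data_freshness_hours > max_staleness_hours:
        actions.append("integration: refresh or repair source pipelines")
    if sig.policy_violations > 0:
        actions.append("governance: review access policies and lineage")
    if sig.kpi_actual < kpi_tolerance * sig.kpi_target:
        actions.append("ai: schedule retraining and re-evaluation")
    return actions


signal = ValueSignal(kpi_actual=0.6, kpi_target=1.0, data_freshness_hours=36, policy_violations=0)
print(route_feedback(signal))
# ['integration: refresh or repair source pipelines', 'ai: schedule retraining and re-evaluation']
```

In practice an orchestrator would turn these actions into tickets or pipeline runs; the point of the sketch is only that value metrics flow backward into integration, governance, and retraining rather than ending in a report.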

From Central Ownership to Federated Enablement:

Data mesh and semantic data fabrics are not mutually exclusive. A hybrid model lets domain teams move fast within platform-level guardrails.

From Compliance-Only Governance to Proactive Trust:

Governance should be built for discovery and confidence, not just risk mitigation. This requires metadata automation and integrated model oversight.

From AI Prototypes to Products:

MLOps, model observability, and business alignment are the infrastructure that separates pilots from platforms.

From Efficiency to Value-Centric Design:

Every stage should be scoped by its contribution to business outcomes. That means asking not “Can we do it faster?” but “Should we do it at all and why?”

The Chain Is the Strategy

AI, by itself, cannot transform businesses. Systems can. And the most important system to get right in 2025 is your data–AI value chain.

The biggest risk is misalignment. When organizations fail to connect the dots between integration, trust, AI scaling, and value realization, they accumulate technical debt disguised as innovation. Worse, they amplify demand without control, accelerating costs without outcomes.

But when implemented holistically, with feedback loops, federated ownership, and rigorous value tracking, the Data–AI Value Chain becomes more than a framework. It becomes a strategy, one that’s ready for the next AI model, and for the next ten.